QRC2024

This Course is hosted at the

ETTORE MAJORANA FOUNDATION AND CENTRE FOR SCIENTIFIC CULTURE

FOUNDER: A. ZICHICHI

as part of the

INTERNATIONAL SCHOOL ON NONEQUILIBRIUM PHENOMENA

Directors of the School: A. Lanzara, G. M. Palma, B. Spagnolo

Working Party on Quantum Reservoir Computing

Directors of the Course: S. Lorenzo, G. M. Palma, M. Paternostro

Dates: 15 - 18 October 2024

The aim of the course is to discuss the current understanding of quantum reservoir computing and to develop characterization tools for quantum computation and process identification. The features of specific platforms currently being explored for these goals will be considered, as well as the impact of noise and thermodynamic constraints.

The working party will discuss the range of tasks that can be efficiently solved by QRC and, bly, the combination of analytical and numerical approaches, including the exploitation of quantum simulation on quantum computing platforms.

If you want to join us, please REGISTER.

Program

Tomasz Paterek

Quantum Reservoir Processing

Oliver Neill

Photon number resolved quantum reservoir computing

Enrico Prati

Quantum machine learning and the exploitation of noise as a computational resource

Valeria Cimini

Experimental realization of photonic quantum extreme learning machines

Andrea Smirne

Quantumness in sequential measurements: coherences, correlations and quantum combs

Marco Vetrano

QELM response to scrambling dynamics and applications to atmospheric retrieval

Marco Miceli

An astrophysical problem: can we study stellar explosions through QRC?

Lunch

Antonio Sannia

Dissipative Quantum Reservoir Computers

Gabriele Lo Monaco

QELM for quantum chemistry and biological tasks

Alessandro Ferraro

Quantum resources and classical simulation in continuous-variable circuits

Sofia Sgroi

Designing fully connected quantum networks for optimal excitation-transfer

Dario Tamascelli

Spectral Density Modulation and Universal Markovian Closure of Structured Environments

Davide Rattacaso

Quantum circuit compilation with quantum computers

Francesco Monzani

Universality conditions of unified classical and quantum reservoir computing

Jacopo Settino

Memory-Augmented Hybrid Quantum Reservoir Computing

Iris Agresti

Experimental quantum machine learning on photonic platforms

Alessandro Romancino

Entanglement Percolation in Random State Quantum Networks

Alessandra Colla

Thermodynamic role of general environments: from heat bath to work reservoir

Luca Innocenti

Connections Between QELM, QRC, and Shadow Tomography

Marco Pezzutto

Non-positive energy quasidistributions in coherent collisional models

Oliver Neill's Talk

Title: Photon number resolved quantum reservoir computing

Abstract: Quantum computers promise huge increases in computational performance on specific tasks. However, a large gap has developed between our theoretical understanding of quantum information processing, and our current experimental capabilities, as efforts to build scalable hardware with acceptable noise and error rates continue. Here, we propose a new method for performing application-specific computing using quantum resources which aims to be practical, scalable, and implementable with existing commercial hardware. In particular, we use the infinite dimensional Hilbert space generated by multiphoton interference and photon number resolved detection (PNRD) to create a reservoir computer with rich dynamics, while considering realistic quantum state preparation, losses, and detection efficiencies. We analyse this system analytically and through simulations, highlighting the role of PNRD as a powerful quantum resource.

Francesco Monzani's Talk

Title: Universality conditions of unified classical and quantum reservoir computing

Abstract: The power of many neural network architectures resides in their universal approximation property. As is widely known, many classes of reservoir computers serve as universal approximators of functionals with fading memory. In this talk, we review several case-specific results on the universality of classical and quantum reservoir computers, aiming to highlight their common features. This yields a ready-made, simplified mathematical setting for ensuring the efficacy of a given reservoir architecture. Then, we test our framework by showing that noisy quantum channels can be leveraged to ensure the universality of a gate-based echo state network.

Marco Pezzutto's Talk

Title: Non-positive energy quasidistributions in coherent collisional models

Abstract: Collisional models have proven to be insightful tools for investigating open quantum system dynamics. Recently, they were applied to the scenario where a system interacts with elements of an environment prepared in a state with quantum coherence, given by the mixture of a coherent Gibbs state and an equilibrium Gibbs one. In the short system-environment interaction time limit, a clear formulation of the first and second laws of thermodynamics emerges, including also contributions due to the presence of quantum coherence. Here we go beyond the short interaction time limit and energy-preserving condition, obtaining the distributions of the stochastic instances of internal energy variation, coherent work, incoherent heat, and mechanical work. We show that the corresponding Kirkwood-Dirac Quasiprobability distributions (KDQ) can take non-commutativity into account, and return the statistical moments of the distributions, in a context where the two-point measurement scheme would not be adequate. We certify the conditions under which the collision process exhibits genuinely quantum traits, using as quantumness criteria the negativity of the real part of a KDQ, and the deviations from zero of its imaginary part. We test our results on a qubit case-study, amenable to analytical treatment as far as the KDQ for thermodynamic quantities are concerned, whereas the full qubit dynamics over repeated collisions is accessible numerically. Our work represents an instance where thermodynamics can be extended beyond thermal systems, as well as an example of application of KDQ distributions in a thermodynamic context where quantum effects play a significant role.
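As a rough illustration of the quasiprobabilities mentioned in this abstract, the following minimal sketch computes a generic Kirkwood-Dirac quasiprobability q[i, j] = Tr[U† P_j U P_i ρ] for a single qubit. The state, the unitary, and the helper name `kirkwood_dirac` are illustrative assumptions and are not taken from the talk; the sketch does not model the collisional setting or the specific thermodynamic quantities discussed there.

```python
import numpy as np

def kirkwood_dirac(rho, projectors_initial, projectors_final, unitary):
    """Return q[i, j] = Tr[U^dagger P_j^final U P_i^initial rho]."""
    q = np.empty((len(projectors_initial), len(projectors_final)), dtype=complex)
    for i, p_i in enumerate(projectors_initial):
        for j, p_j in enumerate(projectors_final):
            q[i, j] = np.trace(unitary.conj().T @ p_j @ unitary @ p_i @ rho)
    return q

# Energy eigenprojectors of a qubit Hamiltonian proportional to sigma_z.
p0 = np.array([[1, 0], [0, 0]], dtype=complex)
p1 = np.array([[0, 0], [0, 1]], dtype=complex)
projectors = [p0, p1]

# Hypothetical pure state with coherence in the energy eigenbasis.
a, phi = np.pi / 8, np.pi / 3
psi = np.array([np.cos(a), np.exp(1j * phi) * np.sin(a)])
rho_coherent = np.outer(psi, psi.conj())

# Same populations, but with the coherences removed.
rho_diagonal = np.diag(np.diag(rho_coherent))

# Hypothetical evolution: rotation about x, U = exp(-i * theta / 2 * sigma_x).
theta = np.pi / 3
sigma_x = np.array([[0, 1], [1, 0]], dtype=complex)
U = np.cos(theta / 2) * np.eye(2) - 1j * np.sin(theta / 2) * sigma_x

q_coherent = kirkwood_dirac(rho_coherent, projectors, projectors, U)
q_diagonal = kirkwood_dirac(rho_diagonal, projectors, projectors, U)

# Final-time populations, which the quasiprobability must reproduce as a marginal.
final_populations = np.real(np.diag(U @ rho_coherent @ U.conj().T))
```

For the diagonal state the quasiprobability is real and non-negative and coincides with the two-point measurement statistics, whereas for the coherent state its entries are complex in this example while still summing to one and returning the correct marginals.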

Marco Miceli's Talk

Title: An astrophysical problem: can we study stellar explosions through QRC?

Abstract:

Jacopo Settino's Talk

Title: Memory-Augmented Hybrid Quantum Reservoir Computing

Abstract: Reservoir computing (RC) is an effective method for predicting chaotic systems by using a high-dimensional dynamic reservoir with fixed internal weights, while keeping the learning phase linear, which simplifies training and reduces computational complexity compared to fully trained recurrent neural networks (RNNs).

Quantum reservoir computing (QRC) uses the exponential growth of Hilbert spaces in quantum systems, allowing for greater information processing, memory capacity, and computational power. However, the original QRC proposal requires coherent injection of inputs multiple times, complicating practical implementation.

We present a hybrid quantum-classical approach that implements memory through classical post-processing of quantum measurements. This approach avoids the need for multiple coherent input injections and is evaluated on benchmark tasks, including the chaotic Mackey-Glass time series prediction. We tested our model on two physical platforms: a fully connected Ising model and a Rydberg atom array. The optimized model demonstrates promising predictive capabilities, achieving a higher number of steps compared to previously reported approaches.
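The classical reservoir computing scheme recalled at the start of this abstract (a fixed random reservoir with a linearly trained readout) can be sketched as follows. This is a minimal classical echo state network on a toy sine-prediction task, not the hybrid quantum model of the talk; the reservoir size, spectral radius, ridge parameter, and function names are all illustrative assumptions.

```python
import numpy as np

def run_reservoir(inputs, n_nodes=50, spectral_radius=0.9, seed=0):
    """Drive a fixed random tanh reservoir and return its state at every step."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=n_nodes)
    w = rng.normal(size=(n_nodes, n_nodes))
    # Rescale the internal weights to the requested spectral radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    state = np.zeros(n_nodes)
    states = np.empty((len(inputs), n_nodes))
    for t, u in enumerate(inputs):
        state = np.tanh(w @ state + w_in * u)
        states[t] = state
    return states

def add_bias(states):
    """Append a constant column so the readout can learn an offset."""
    return np.hstack([states, np.ones((len(states), 1))])

def train_readout(states, targets, ridge=1e-6):
    """Ridge regression: the only trained part of the model."""
    x = add_bias(states)
    return np.linalg.solve(x.T @ x + ridge * np.eye(x.shape[1]), x.T @ targets)

def predict(states, weights):
    return add_bias(states) @ weights

# Toy task: predict the next sample of a sine wave.
signal = np.sin(0.1 * np.arange(600))
states = run_reservoir(signal[:-1])
targets = signal[1:]

washout = 50  # discard the initial transient of the reservoir
weights = train_readout(states[washout:400], targets[washout:400])
test_rmse = np.sqrt(np.mean((predict(states[400:], weights) - targets[400:]) ** 2))
```

Only `weights` is fitted; the reservoir matrices are drawn once and never updated, which is what keeps the learning phase linear.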

Valeria Cimini's Talk

Title: Experimental realization of photonic quantum extreme learning machines

Abstract: In the context of quantum machine learning, quantum reservoir computing and its simplified version, often referred to as extreme learning machines, are emerging as highly promising approaches for near-term applications on noisy intermediate-scale quantum (NISQ) devices. In the classical domain, photonic systems have proven to be among the leading platforms for neuromorphic computing, exhibiting high scalability, fast information processing, and energy efficiency. Photonic neuromorphic architectures have demonstrated strong potential in tasks ranging from classification to dynamic signal processing, making them ideal candidates for classical and quantum information processing.

Building on this foundation, we explore how quantum photonic platforms can be exploited to address both quantum and classical computational tasks efficiently. On the quantum side, we demonstrate how the dynamics of a single-photon quantum walk in high-dimensional photonic orbital angular momentum space can be used for the robust and resource-efficient reconstruction of quantum properties without requiring precise characterization of the measurement apparatus. This process is resilient to experimental imperfections, offering a reliable method for quantum state characterization.

On the classical side, we prove that multi-mode squeezed states can be applied to solve standard classification tasks. In this scenario, we highlight how the presence of quantum correlations in the reservoir leads to enhanced performance compared to systems using classical light. Specifically, we demonstrate that increasing the strength of quantum correlations and the dimensionality of the quantum system brings improved performance in standard classification tasks, surpassing the capabilities of classical optical reservoirs and digital classifiers.

Andrea Smirne's Talk

Title: Quantumness in sequential measurements: coherences, correlations and quantum combs

Abstract: More than a century after the advent of quantum theory, identifying which properties and phenomena are fundamentally quantum remains a central and elusive challenge across various fields of investigation. How can we witness the role of quantum coherence in biological phenomena? Are there distinct behaviors associated with the principles of thermodynamics when we move to the microscopic realm? What quantum features guarantee advantages when quantum computational protocols are performed instead of classical ones? In this talk, I will discuss to what extent non-classicality can be linked to quantum coherences and quantum correlations when considering an open quantum system undergoing sequential measurements at different times. In particular, I will highlight the critical distinction between Markovian and non-Markovian processes in this context. While Markovian processes can be effectively addressed using standard quantum dynamical maps, which establish a direct link between non-classical statistics and quantum coherence dynamics, non-Markovian processes require a more comprehensive framework: quantum combs. After briefly explaining how quantum combs work in a simple case study, I will show how they make it possible to characterize in full generality those multi-time statistics that are properly quantum and to link them with a specific property of the system-environment correlations.

Alessandro Romancino's Talk

Title: Entanglement Percolation in Random State Quantum Networks

Abstract: Quantum information shows that entanglement is a crucial resource for applications that go beyond classical capabilities. Thanks to quantum correlations, protocols such as secure quantum cryptography, dense coding, and quantum teleportation can now be implemented on various scales. However, entanglement at a distance remains a challenging experimental endeavour. To address this issue, entanglement distribution employs different methods, such as quantum repeaters and entanglement distillation. Another technique, inspired by statistical physics, exploits the features of a quantum network of partially entangled states. This protocol, called entanglement percolation, uses local operations and classical communication (LOCC) and entanglement swapping to create a maximally entangled state between two arbitrary nodes in the network. This problem can be mapped exactly onto a percolation problem and can be simulated effectively with classical network theory. The classical entanglement percolation (CEP) threshold measures the initial amount of entanglement needed to ensure the creation of the desired singlet. This approach has been shown to be, in general, suboptimal, and an improvement called quantum entanglement percolation (QEP) has been developed, in which the quantum network is first prepared using local quantum operations only. Still, this protocol is known not to be optimal either, and finding the optimal protocol remains an open question. Different aspects of the problem have also been tackled, such as multipartite entanglement and mixed states. A deterministic protocol based on concurrence has also shown promising results. In our work, we have generalized the problem by relaxing the original assumption that the initial states are fixed. Using random states and results from extreme value theory, we have found that only the average initial entanglement is important for entanglement distribution purposes and that, in general, the QEP protocol can perform worse than the CEP protocol in this more realistic scenario.
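The mapping to percolation described in this abstract can be sketched numerically under simplifying assumptions. For a two-qubit pure state with smaller Schmidt coefficient λ₂, the optimal LOCC probability of conversion to a singlet is min(1, 2λ₂); in CEP each link is converted independently with this probability, which turns the network into a bond percolation problem. The square lattice (bond threshold 1/2), the left-to-right spanning criterion used as a proxy for long-distance connectivity, and all function names below are illustrative choices, not the setting of the talk.

```python
import numpy as np

def singlet_conversion_probability(schmidt_min):
    """Optimal LOCC probability of turning a two-qubit pure state into a singlet."""
    return min(1.0, 2.0 * schmidt_min)

def find(parent, i):
    """Union-find root lookup with path halving."""
    while parent[i] != i:
        parent[i] = parent[parent[i]]
        i = parent[i]
    return i

def spans(size, p, rng):
    """Open each bond of a size x size square lattice with probability p and
    report whether an open path joins the left and right edges."""
    parent = list(range(size * size))

    def union(a, b):
        root_a, root_b = find(parent, a), find(parent, b)
        if root_a != root_b:
            parent[root_a] = root_b

    for row in range(size):
        for col in range(size):
            node = row * size + col
            if col + 1 < size and rng.random() < p:
                union(node, node + 1)      # horizontal bond converted to a singlet
            if row + 1 < size and rng.random() < p:
                union(node, node + size)   # vertical bond converted to a singlet
    left = {find(parent, row * size) for row in range(size)}
    right = {find(parent, row * size + size - 1) for row in range(size)}
    return bool(left & right)

def spanning_fraction(size, p, trials=200, seed=0):
    """Monte Carlo estimate of the spanning probability at bond probability p."""
    rng = np.random.default_rng(seed)
    return sum(spans(size, p, rng) for _ in range(trials)) / trials

# Links with a small Schmidt coefficient of 0.05 versus 0.45.
p_weak = singlet_conversion_probability(0.05)    # 0.1, below the threshold 1/2
p_strong = singlet_conversion_probability(0.45)  # 0.9, above the threshold 1/2
fraction_weak = spanning_fraction(20, p_weak)
fraction_strong = spanning_fraction(20, p_strong)
```

Well below the threshold spanning clusters are rare, and well above it they are typical, which is the sense in which the CEP threshold fixes the minimum initial entanglement per link.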

Antonio Sannia's Talk

Title: Dissipative Quantum Reservoir Computers

Abstract: In this talk, we will discuss the role of dissipative phenomena in quantum reservoir computing. Traditionally seen as a limitation, dissipation is reinterpreted as a powerful tool that, when engineered correctly, enhances the computational abilities of quantum systems. By introducing continuous, tunable dissipation into quantum spin networks, we demonstrate significant improvements in time series processing, in both the Markovian and non-Markovian regimes. Moreover, we will demonstrate that the Liouvillian skin effect is present in this machine-learning context. In particular, our findings indicate that boundary conditions can significantly impact the performance of quantum reservoir computers when subjected to dissipative driving.

Gabriele Lo Monaco's Talk

Title: Quantum extreme learning machine for quantum chemistry and biological tasks

Abstract: Among the paradigms of quantum supervised machine learning, quantum reservoir computing, and specifically the quantum extreme learning machine (QELM), have emerged as some of the most promising approaches. The notable advantage of QELM lies in the simplicity of its optimization routine, which requires minimal resources. Classical data are encoded in the quantum state of input qubits, with the choice of encoding determining the model’s expressivity. The information is distributed across a reservoir interacting with the encoding qubits through a fixed scrambling dynamics that does not require any optimization during training. The reservoir is then measured and the outcomes are collected on a classical computer for post-processing; this optimization step involves a simple linear regression to fit the target data.

We present the first implementation of QELM on NISQ devices with applications to quantum chemistry and biological tasks of practical interest. We use QELM to reconstruct the potential energy surface of various molecules, mapping the geometry of a molecular species to its energy. Our setup outperforms classical neural networks and other quantum routines such as VQE, while minimizing quantum resource costs. It is scalable and has controlled depth, making it suitable for practical implementation on real hardware. The implementation performed on IBM Quantum platforms yielded encouraging results, with the average error close to chemical accuracy and no need for error mitigation.

In the biological domain, we employ QELM as a support vector machine for protein classification on large experimental datasets. A practical example is classifying proteins based on their interaction with angiotensin enzyme, predicting peptides with anti-hypertensive effects that could contribute to cardiovascular health. The proteins are described in terms of 40 descriptors of biological relevance, such as aromaticity or hydrophobicity, a large number of input features presenting a challenge and benchmark for the current status of QELM. This second application confirms the feasibility of QELM and manifests an interesting resistance to statistical noise and decoherence, suggesting its potential for imminent applications in industrial pharmacology.

Alessandro Ferraro's Talk

Title: Quantum resources and classical simulation in continuous-variable circuits.

Abstract: Determining the evolution of multiple interacting quantum systems is notoriously challenging, a difficulty that underpins the anticipated quantum advantage in computing. In the context of continuous-variable systems, Wigner negativity, recognized as a genuine quantum feature from a fundamental standpoint, serves as a valuable resource in computational models based on Gaussian evolutions. However, I will present scenarios demonstrating how classical devices can efficiently simulate the evolution of certain highly Wigner-negative resources. This finding naturally leads to the concept of excess Wigner negativity, which is useful for quantifying non-stabilizerness (or magic) in qubit-based quantum computation. Furthermore, it facilitates the development of a strategy to determine whether a given state can be regarded as a resource, thereby allowing us to establish the first known sufficient condition for assessing the computational resourcefulness of continuous-variable states.

Sofia Sgroi's Talk

Title: Designing fully connected quantum networks for optimal excitation-transfer

Abstract: Boosted by the unprecedented interest in quantum information technologies, the study of the properties of complex networks in the quantum domain has received a great deal of attention, due to its broad range of applicability. Modeling complex quantum phenomena in terms of simple quantum systems is not sufficient to capture a plethora of interesting problems belonging to different fields, ranging from quantum communication and transport phenomena in nanostructures to quantum biology. Despite their apparent differences, such phenomena face similar theoretical challenges. In particular, they require a deeper understanding of the role played by the geometry and topology in the properties of the network itself, as well as its optimal functionality.

In this talk, we address the problem of identifying network configurations compatible with optimal transport performances. However, attacking this multifaceted issue from the most general standpoint would be a formidable task. Here we focus on two distinct scenarios. First, we consider a specific model of open quantum network, whose features have been extensively studied due to its ability to effectively describe the phenomenological dynamics of the Fenna–Matthews–Olson (FMO) protein complex. We systematically optimize its local energies without changing the interaction strengths to achieve high excitation transfer for various environmental conditions, using an adaptive Gradient Descent technique and Automatic Differentiation. Then, we address the problem of optimal chain design in terms of geometrical configuration, this time effectively focusing on distance-dependent, site-to-site interactions. We propose a bottom-up approach, based on Reinforcement Learning, to the design of a chain achieving efficient excitation-transfer performances.

Dario Tamascelli's Talk

Title: Spectral Density Modulation and Universal Markovian Closure of Structured Environments

Abstract: The combination of chain-mapping and tensor-network techniques provides a powerful tool for the numerically exact simulation of open quantum systems interacting with structured environments. However, these methods suffer from a quadratic scaling with the physical simulation time, and therefore they become challenging in the presence of multiple environments. In this talk we first illustrate how a thermo-chemical modulation of the spectral density allows replacing the original environments with equivalent, but simpler, ones. Moreover, we show how this procedure reduces the number of chains needed to model multiple environments. We then provide a derivation of the Markovian closure construction, consisting of a small collection of damped modes undergoing a Lindblad-type dynamics and mimicking a continuum of bath modes. We describe, in particular, how the use of the Markovian closure allows for a polynomial reduction of the time complexity of chain-mapping based algorithms when long-time dynamics are needed.

Davide Rattacaso's Talk

Title: Quantum circuit compilation with quantum computers

Abstract: Compilation optimizes the performance of quantum algorithms on real-world quantum computers. To date, it is performed via classical optimization strategies, but its NP-hard nature makes finding optimal solutions difficult. We introduce a class of quantum algorithms to perform compilation via quantum computers, paving the way for a quantum advantage in compilation. We demonstrate the effectiveness of this approach via Quantum and Simulated Annealing-based compilation: we successfully compile a Trotterized Hamiltonian simulation with up to 64 qubits and 64 time steps and a Quantum Fourier Transform with up to 40 qubits and 771 time steps. Furthermore, we show that, for a translationally invariant circuit, the compilation results in a fidelity gain that grows extensively in the size of the input circuit, outperforming any local or quasi-local compilation approach.

Alessandra Colla's Talk

Title: Thermodynamic role of general environments: from heat bath to work reservoir

Abstract: Environments in quantum thermodynamics usually take the role of heat baths. These baths are Markovian, weakly coupled to the system, and initialized in a thermal state. Whenever one of these properties is missing, standard quantum thermodynamics is no longer suitable to treat the thermodynamic properties of the system that result from the interaction with the environment. Using a recently proposed framework for open system quantum thermodynamics at arbitrary coupling regimes, we show that within the very same model (a Fano-Anderson Hamiltonian) the environment can take three different thermodynamic roles: a standard heat bath, exchanging only heat with the system, a work reservoir, exchanging only work, and a hybrid environment, providing both types of energy exchange. The exact role of the environment is determined by the strength and structure of the coupling, and by its initial state.

Iris Agresti's Talk

Title: Experimental quantum machine learning on photonic platforms

Abstract: The past decades have witnessed a swift development in the field of quantum-based technologies. In particular, a strong interest has been devoted to quantum computation, which promises to outperform its classical counterpart. However, a quantum advantage has only been demonstrated for problems with no practical applications, and it is still an open question whether the same can happen for useful tasks. Even translating classical protocols to quantum hardware constitutes a challenge. This is why the combination of machine learning and quantum computation has attracted a flurry of interest. The hardest challenge in implementing machine learning models on quantum hardware is the implementation of nonlinear behaviors, which are essential for an effective learning process. This is not trivial to achieve since, on the one hand, closed quantum systems have a linear evolution, while, on the other hand, open systems tend to lose coherence. In this talk, we will go through possible ways of overcoming this apparent conundrum and we will show two experimental implementations of machine learning models: one exploiting the nonlinearity coming from a suitable encoding of the data and the second exploiting a feedback loop, through the use of the photonic quantum memristor. In the first example, we implement an instance of a quantum kernel, where the idea is to map nonlinearly separable data from an initial space to a higher-dimensional one (the so-called feature space), where they become classifiable through a hyperplane. In this work, we show that a mapping exploiting the sampling of the output statistics of the unitary evolution of a Fock state solves given tasks with a higher accuracy than standard kernel methods. In the second example, we implement a quantum reservoir computing model, where a quantum nonlinear substrate equipped with memory is implemented through the photonic quantum memristor. This makes it possible to achieve sufficient nonlinearity and short-term memory to reproduce and predict nonlinear functions and time series.

Marco Vetrano's Talk

Title: Quantum Extreme Learning response to scrambling dynamics and applications to atmospheric retrieval

Abstract: Quantum extreme learning machines (QELMs) leverage untrained quantum dynamics to efficiently process information encoded in input quantum states, avoiding the high computational cost of training more complicated nonlinear models. On the other hand, quantum information scrambling (QIS) quantifies how the spread of quantum information into correlations makes it irretrievable from local measurements.

We will explore the tight relation between QIS and the predictive power of QELMs by showing that a state estimation task can be solved even beyond scrambling time. These results offer promising avenues for robust experimental QELM-based state estimation protocols, as well as providing novel insights into the nature of QIS from a state estimation perspective.

QELM has already shown promising results in the reconstruction of quantum state properties, quantum chemistry and more. In this context, we are going to review some preliminary results of applications of QELM in the field of astrophysics. In particular, we are going to show how QELM can extract features from clean and noisy spectral data of exoplanets in order to retrieve atmospheric parameters such as the chemical composition and the radius of an exoplanet.

Tomasz Paterek's Talk

Title: Quantum Reservoir Processing

Abstract: The basic idea of reservoir processors is to conduct simple measurements on nodes of uncharacterized networks and post-process their outcomes (train) to achieve a specific task. In the quantum version, the reservoir network is composed of quantum bits and, accordingly, one can feed it with quantum states and consider quantum outputs. We will review our works on quantum reservoir processors (QRP) where we allowed the input and output to be classical or quantum. For example, for quantum input and classical output, the QRP device can perform quantum tomography or entanglement estimation, for classical input and quantum output, the QRP can prepare a range of interesting states by being pumped with coherent states followed by linear optics, and for quantum inputs and outputs, the device can execute quantum computations through closed and open system evolutions. We will report on an experimental implementation of some of these ideas.

Luca Innocenti's Talk

Title: Connections Between Quantum Extreme Learning Machines, Quantum Reservoir Computing, and Shadow Tomography

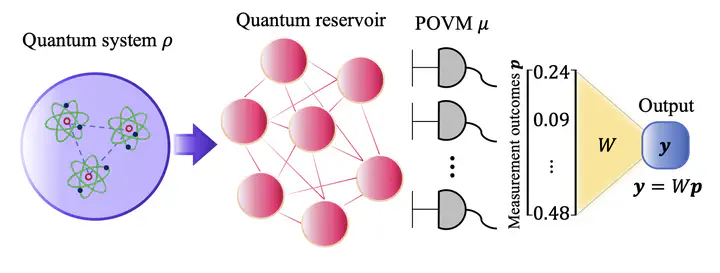

Abstract: Quantum extreme learning machines (QELMs) and quantum reservoir computing (QRC) aim to efficiently post-process the outcomes of fixed—often uncalibrated—quantum devices to estimate properties of quantum states and solve related tasks. Shadow tomography, on the other hand, provides a methodology for estimating these properties without the resource scaling inherent in traditional tomography, utilizing measurement frames and the concept of dual measurements.

In this work, we present a unified framework that bridges QELMs, QRC, and shadow tomography by modeling them through effective measurements derived from general Positive Operator-Valued Measures (POVMs). We demonstrate that QELMs and QRCs can be concisely described via single effective measurements, providing an explicit characterization of the information retrievable with such protocols. Additionally, we show that the training process in QELMs closely parallels the reconstruction of effective measurements characterizing a given device.

Our approach reveals deep connections between these methodologies, highlighting how both can be viewed as quantum estimation techniques differing only in their assumptions about prior knowledge of the measurement apparatus. By leveraging the formalism of measurement frames, we examine the interplay between measurements, reconstructed observables, and estimators. This unified perspective paves the way for a more thorough understanding of the capabilities and limitations of QELMs, QRCs, and shadow tomography, potentially enhancing quantum state estimation methods to be more resilient to noise and imperfections while avoiding the dimensionality issues of traditional tomography.
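The statement that training a QELM parallels reconstructing an effective measurement can be illustrated with a small numerical sketch. Here the "device" is assumed, purely for illustration, to be a known tetrahedral POVM on one qubit with exact outcome probabilities (no sampling noise); in an actual QELM the effective measurement would be unknown and only its outcome statistics would be available. The target observable σ_z and all names are hypothetical choices.

```python
import numpy as np

I2 = np.eye(2, dtype=complex)
SX = np.array([[0, 1], [1, 0]], dtype=complex)
SY = np.array([[0, -1j], [1j, 0]], dtype=complex)
SZ = np.array([[1, 0], [0, -1]], dtype=complex)

# Tetrahedral POVM: four effects (I + n.sigma) / 4 that sum to the identity.
directions = np.array([[1, 1, 1], [1, -1, -1], [-1, 1, -1], [-1, -1, 1]]) / np.sqrt(3)
povm = [(I2 + n[0] * SX + n[1] * SY + n[2] * SZ) / 4 for n in directions]

def random_pure_state(rng):
    """Density matrix of a random single-qubit pure state."""
    v = rng.normal(size=2) + 1j * rng.normal(size=2)
    v /= np.linalg.norm(v)
    return np.outer(v, v.conj())

def outcome_probabilities(rho):
    """Exact outcome probabilities of the POVM on the state rho."""
    return np.array([np.real(np.trace(effect @ rho)) for effect in povm])

def expectation(observable, rho):
    return np.real(np.trace(observable @ rho))

# Training set: probabilities as features, <sigma_z> as target.
rng = np.random.default_rng(1)
train_states = [random_pure_state(rng) for _ in range(20)]
features = np.array([outcome_probabilities(rho) for rho in train_states])
targets = np.array([expectation(SZ, rho) for rho in train_states])

# Linear readout obtained by least squares, as in QELM training.
readout, *_ = np.linalg.lstsq(features, targets, rcond=None)

# The trained weights combine the POVM effects into an operator.
reconstructed = sum(w * effect for w, effect in zip(readout, povm))

# Check on a state not used in training.
test_state = random_pure_state(rng)
predicted = readout @ outcome_probabilities(test_state)
```

Because this POVM is informationally complete, the weighted sum of effects in `reconstructed` coincides with the target observable, so the trained readout plays the role of a dual-frame estimator for σ_z.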

Enrico Prati's Talk

Title: Quantum machine learning and the exploitation of noise as a computational resource

Abstract: